A Spotlight on Cognee: the memory engine for AI Agents


If you’re keeping up with the newest graph technologies, like we are at G.V(), you may be closely following natural language integration. The question is this: how do we turn human-readable texts into usable data stores? One potential solution is to combine the robust information storage of knowledge graphs with the natural language capabilities of large language models. Today’s featured software, Cognee, may have the answer!

Introduction

Who are Cognee? What do they do? In short, Cognee has developed a framework for constructing AI memory. They turn your documents into a persistent memory layer that can be carried forward and queried across all your language model interactions.

Here at G.V() we’re really excited to explore what Cognee has to offer. For the technical buffs, the details of Cognee’s approach to parameter optimization are described in a paper, but we recommend saving that further reading until after the demo.

Today, we’re here to have some fun! We’ll create a sample knowledge graph, run a few sample queries and explore the results in G.V(). Since Cognee performs so well on natural language inputs, we’ve decided to try it out on some classic literature. If you’d like to see a graph of Dickens’ literary devices, or the cetology (whale facts) of Moby Dick, keep on reading!

Getting set up with Cognee

Cognee have made it as easy as possible to get started, with both an installation guide and a quickstart guide. Installing Cognee is simply a matter of running the following:

```shell
uv pip install cognee
```

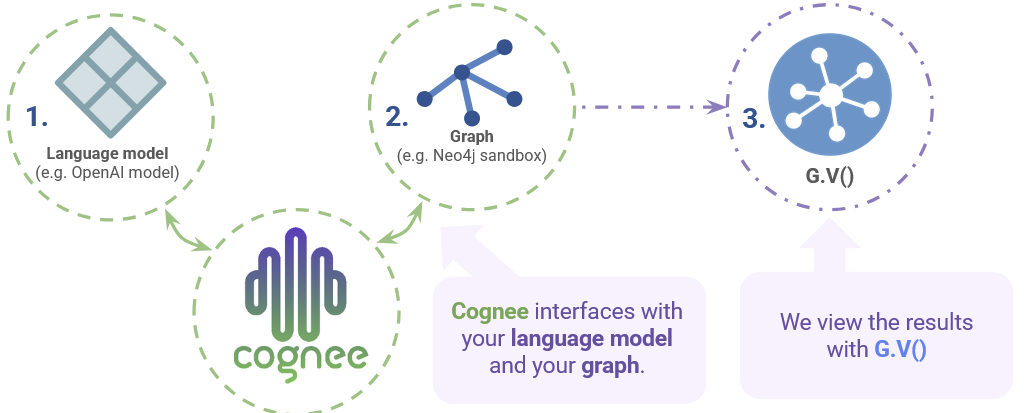

In addition to Cognee itself, you’ll need three more components to follow this demo.

- A language model

- A graph database

- G.V()

The overall layout of your set-up will look like this:

We’ll go through each of these in a little more detail. If you already have all of them set up and configured, you can skip ahead to the next section.

1. Language model – You can either set Cognee up with a paid LLM or integrate it with a free, lightweight model, e.g. via Ollama. As detailed in the installation guide, you set the corresponding API key in your environment, where Cognee can retrieve it.

2. Graph database – Cognee has instructions on how to connect to Kuzu, Neo4j or NetworkX, as well as general database configuration information. By default, Cognee uses Kuzu as its graph database, LanceDB as its vector database and SQLite as its relational database. The best choice will depend on your application (and preferences); today we will use a free online Neo4j Sandbox and ask Cognee to store the graph there.

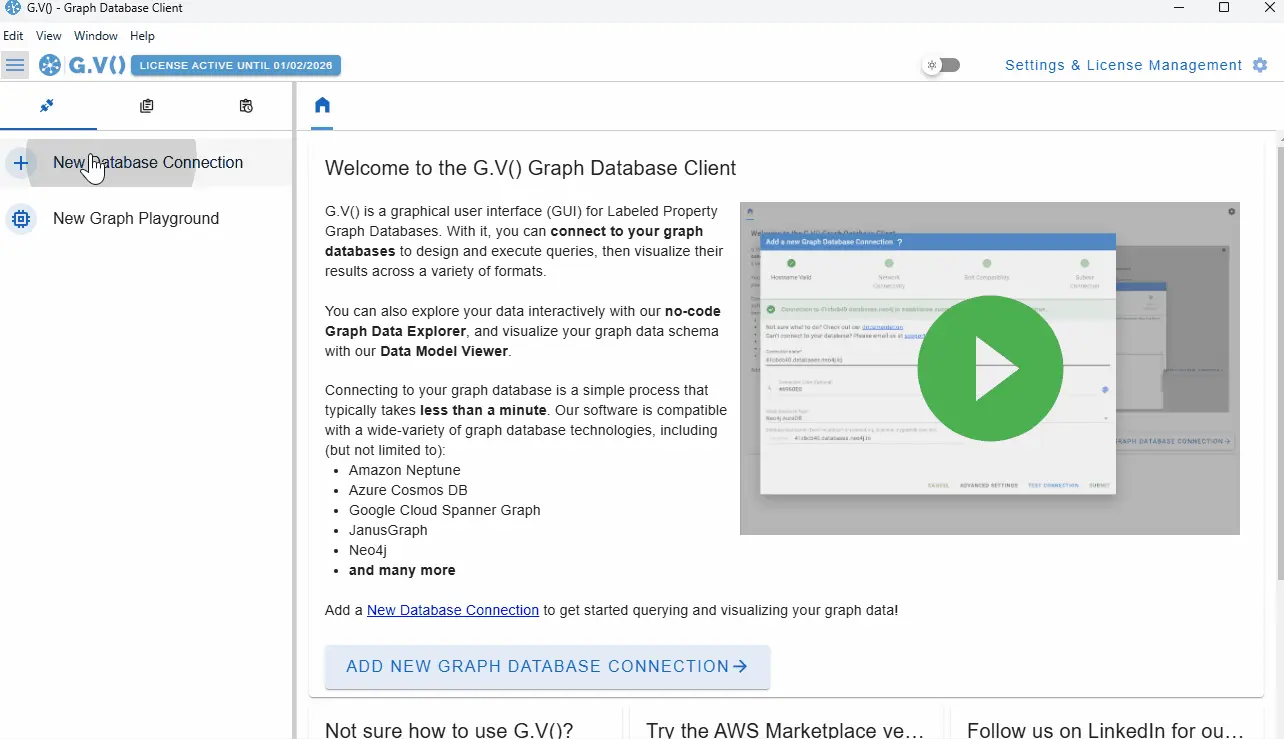

3. G.V() – You can get a copy of G.V() from the download portal. Connecting G.V() lets you visualize your results instantly and take advantage of our numerous customization options and no-code exploration. That means you can see exactly what Cognee is doing without writing any queries. All the diagrams in this post were created with G.V().

Connecting G.V() to your Neo4j instance (or any other compatible database!) is as easy as 1, 2… 3!
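On the Cognee side, the language model and graph database choices described above are read from environment variables (or an equivalent .env file). If you prefer to set them from Python, a minimal sketch might look like this. It assumes the variable names from Cognee’s .env template (LLM_API_KEY, LLM_PROVIDER, LLM_MODEL, LLM_ENDPOINT and the GRAPH_DATABASE_* settings), so check them against the installation guide for the version you install. The helper configure_cognee_env and the Ollama model name are our own illustrative choices, not part of Cognee.

```python
import os


def configure_cognee_env(llm_api_key: str, neo4j_url: str, neo4j_user: str,
                         neo4j_password: str, use_ollama: bool = False) -> dict:
    """Hypothetical helper: export the settings this demo needs as environment
    variables. Names are assumed from Cognee's .env template; verify them for
    your Cognee version."""
    settings = {
        # 1. Language model: the API key for a hosted LLM provider
        "LLM_API_KEY": llm_api_key,
        # 2. Graph database: store the graph in Neo4j instead of the default Kuzu
        "GRAPH_DATABASE_PROVIDER": "neo4j",
        "GRAPH_DATABASE_URL": neo4j_url,
        "GRAPH_DATABASE_USERNAME": neo4j_user,
        "GRAPH_DATABASE_PASSWORD": neo4j_password,
    }
    if use_ollama:
        # Alternatively, a local model served by Ollama (model name is only an example).
        # Local setups usually need embedding settings as well; see the installation guide.
        settings.update({
            "LLM_PROVIDER": "ollama",
            "LLM_MODEL": "llama3.1:8b",
            "LLM_ENDPOINT": "http://localhost:11434/v1",
        })
    # Call this before importing cognee, since its settings may be read early
    os.environ.update(settings)
    return settings
```

For the sandbox used here, you would call something like configure_cognee_env("<your key>", "bolt://<sandbox-host>:7687", "neo4j", "<sandbox password>") and only then import cognee.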

Cognify it!

The essence of Cognee can be boiled down into three primary functions:

cognee.add()

The first step is to add your input files! These form the foundation of your AI memory store and serve as its source of truth.

cognee.cognify()

This step is the real magic ✨ of Cognee, and is where Cognee actually builds your AI memory store. ‘Cognifying’ means using your LLM to build a knowledge graph (plus vector embeddings, as required) that describes your input files.

cognee.search()

You’re now ready to actually use your knowledge graph! The search function is what you use to probe your database and extract information.

Basic example

Let’s take a look at the sample Cognee script given in the Quickstart tutorial.

```python
import cognee
import asyncio


async def main():
    # Create a clean slate for cognee -- reset data and system state
    await cognee.prune.prune_data()
    await cognee.prune.prune_system(metadata=True)

    # Add sample content
    text = "Frodo carried the One Ring to Mordor."
    await cognee.add(text)

    # Process with LLMs to build the knowledge graph
    await cognee.cognify()

    # Search the knowledge graph
    results = await cognee.search(
        query_text="What did Frodo do?"
    )

    # Print each result
    for result in results:
        print(result)


if __name__ == '__main__':
    asyncio.run(main())
```

As we can see, this script includes all three critical stages: Add, Cognify and Search!

We’ve kept it fairly simple for now. We’ve just chosen to Add a simple text string, “Frodo carried the One Ring to Mordor.” The next step was to Cognify this into a knowledge graph. Finally, we used Search to run a sample query “What did Frodo do?” In return, we get an output:

“Frodo carried the One Ring to Mordor”

Cognee processed our data, and answered a question based on the data!

But what’s actually going on here?

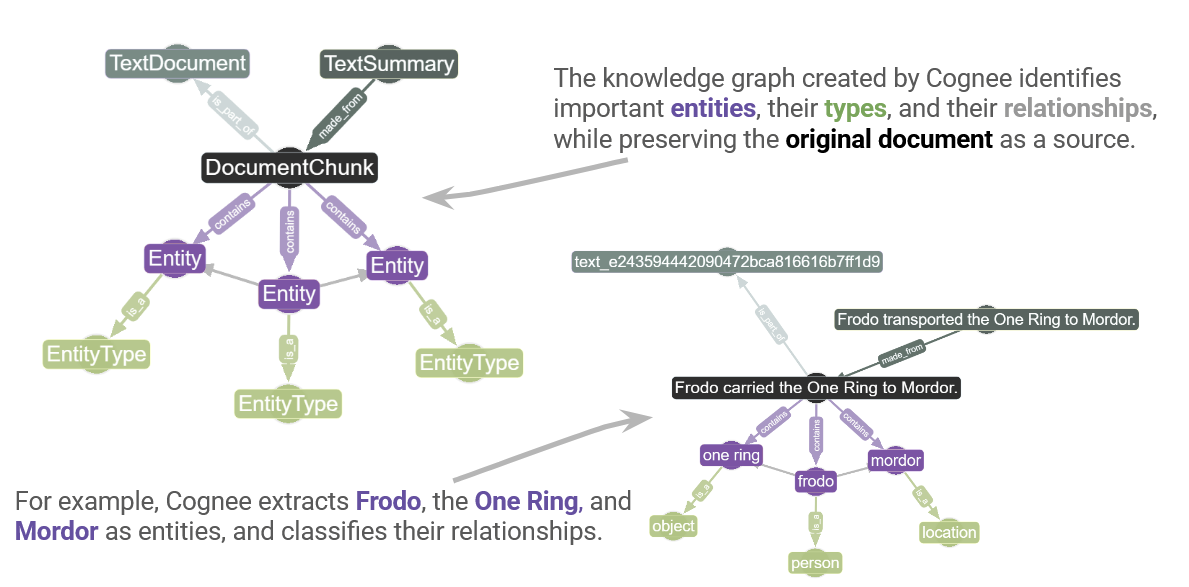

If we open the graph Cognee created in G.V(), we can take a peek under the hood. Our knowledge graph contains a mixture of the original source information and information inferred by the language model:

As we might hope, Cognee enforces a strict structure on the nodes that represent our input text sources. Each inferred node relates back to a DocumentChunk, which in turn belongs to a TextDocument. In other words, inferred information is always linked to its original source in a regular, predictable way. This is how Cognee constructs its AI memory store, and it means you can always return to your original documents for validation; you don’t need to blindly trust the language model’s inferences.
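To make that provenance chain concrete, here is a toy model in plain Python. It is not Cognee’s internal data model (the class names simply mirror the node types visible in G.V()), but it shows the property that matters: any inferred entity can be walked back to the chunk and document it came from. The document name used below is made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class TextDocument:
    name: str


@dataclass
class DocumentChunk:
    text: str
    document: TextDocument  # each chunk belongs to exactly one source document


@dataclass
class Entity:
    name: str
    # chunks the language model extracted this entity from
    source_chunks: list[DocumentChunk] = field(default_factory=list)


def trace_to_sources(entity: Entity) -> list[tuple[str, str]]:
    """Return (document name, chunk text) pairs that back up an inferred entity."""
    return [(chunk.document.name, chunk.text) for chunk in entity.source_chunks]


# Usage with the Frodo example (hypothetical document name)
doc = TextDocument(name="frodo.txt")
chunk = DocumentChunk(text="Frodo carried the One Ring to Mordor.", document=doc)
frodo = Entity(name="frodo", source_chunks=[chunk])
print(trace_to_sources(frodo))  # [('frodo.txt', 'Frodo carried the One Ring to Mordor.')]
```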

When it comes to relationship inference, however, the language model is given more creative freedom. Cognee defines a custom schema on the fly to suit your data, using Entity and EntityType nodes. This represents the information from the input document in a way that is both (1) compatible with traditional graph query languages and (2) human-readable when visualized.

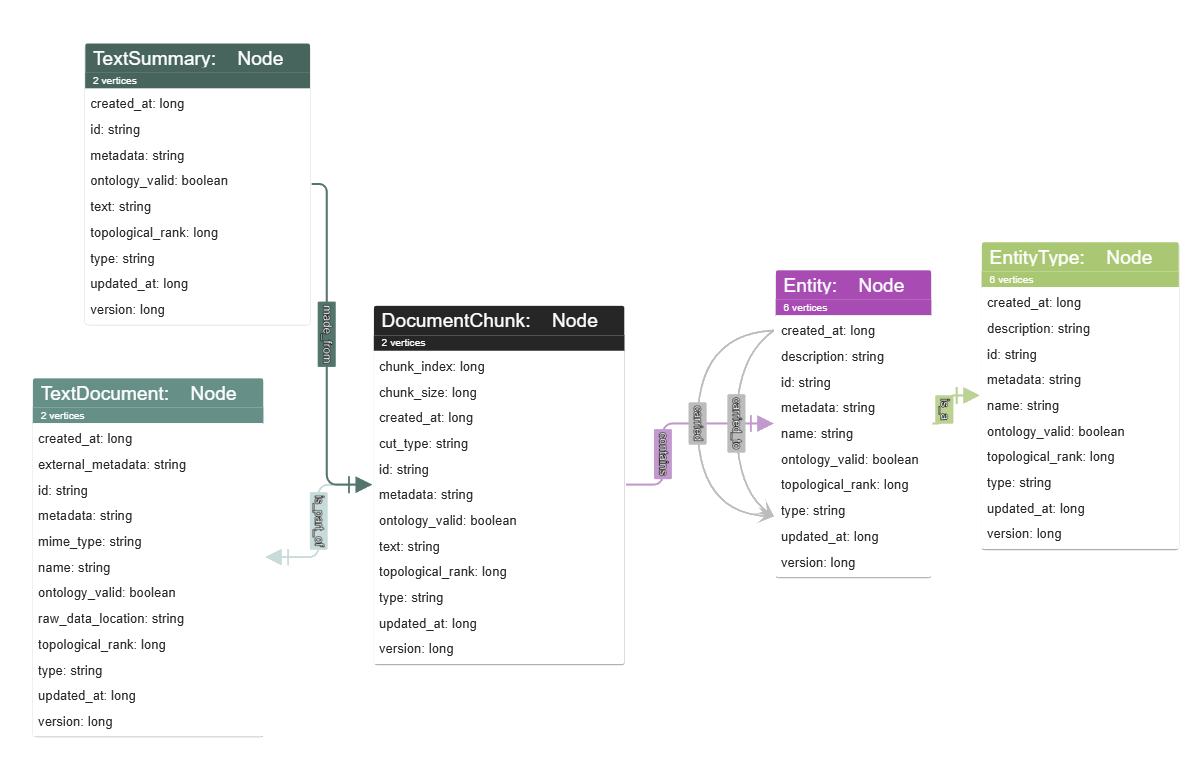

Within G.V(), we can also see this represented in the Data Model:
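If you are working directly against Neo4j, you can get a rough text version of this Data Model view with two Cypher aggregations: one counting nodes per label and one counting relationships per type. The sketch assumes Neo4j 5 and the official neo4j Python driver (its Driver.execute_query method); summarise_schema is a hypothetical helper, and the driver is passed in so the logic can be tried without a live database.

```python
LABEL_COUNTS_QUERY = """
MATCH (n)
UNWIND labels(n) AS label
RETURN label, count(*) AS nodes
ORDER BY nodes DESC
"""

RELATIONSHIP_COUNTS_QUERY = """
MATCH ()-[r]->()
RETURN type(r) AS relationship, count(*) AS edges
ORDER BY edges DESC
"""


def summarise_schema(driver, database="neo4j"):
    """Return ({label: node count}, {relationship type: edge count})."""
    # execute_query returns (records, summary, keys); only the records are needed
    label_records, _, _ = driver.execute_query(LABEL_COUNTS_QUERY, database_=database)
    rel_records, _, _ = driver.execute_query(RELATIONSHIP_COUNTS_QUERY, database_=database)
    node_counts = {r["label"]: r["nodes"] for r in label_records}
    edge_counts = {r["relationship"]: r["edges"] for r in rel_records}
    return node_counts, edge_counts
```

In practice you would pass in a driver created with neo4j.GraphDatabase.driver(uri, auth=(user, password)).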

Graphing classic literature

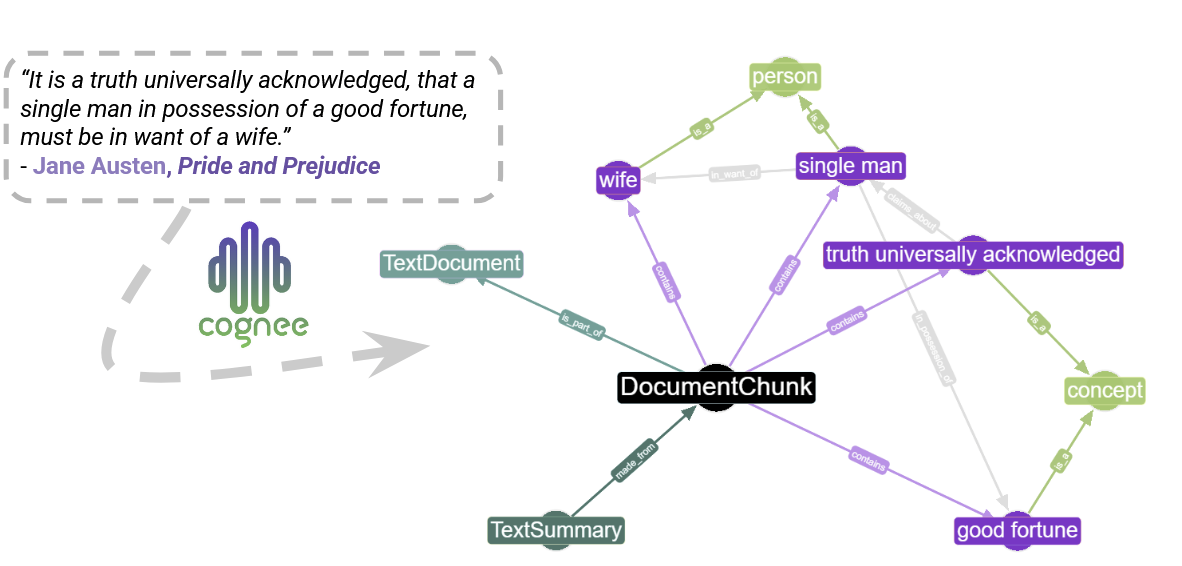

By Cognifying a few well-known passages from popular literature, we can get a feel for how Cognee constructs relationships between Entities in a flexible and dynamic way.

Let’s take a look at Cognifying the opening sentence of Pride and Prejudice:

Jane Austen’s sentence describes a set of straightforward relationships between entities such as ‘a single man,’ ‘a good fortune’ and ‘a wife’. Cognee had no problem taking a literal interpretation of this sentence, transforming nouns into nodes and verbs into relationships to create a simple graph.

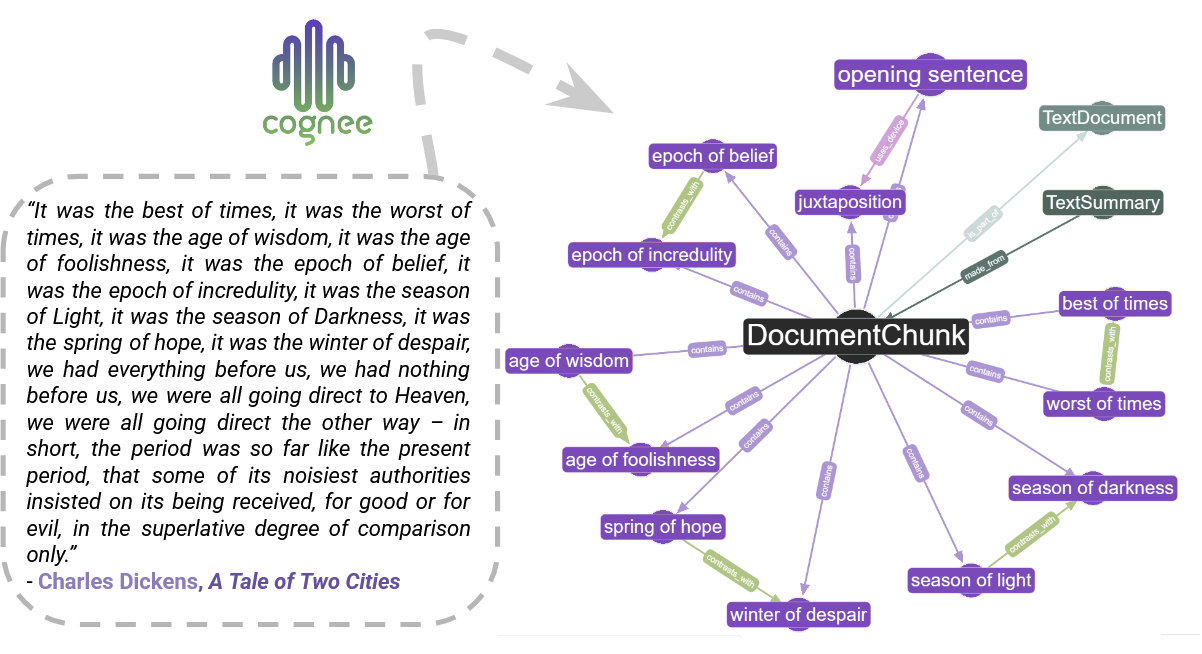

For a slightly different result, let’s try the opening sentence of A Tale of Two Cities:

If you remember your English classes, you might recall that this particular Dickens passage places a lot of emphasis on juxtaposition and repetition. Because of this, so does the Cognee output graph! Cognee identified conceptual clauses (phrases like ‘best of times’ and ‘worst of times’), represented them as nodes, and related them with the [contrasts_with] edge.

As you can imagine, this adaptable process can produce a wide variety of graph structures from different input documents. Part of the benefit of using Cognee in conjunction with G.V() is that you can see exactly how Cognee constructs these relationships straight away, allowing you to further modify your data stores according to purpose. Importantly, the inferred information is always bound to the source information!
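To inspect this noun-and-verb structure as a flat list, you can pull every Entity-to-Entity relationship out as (subject, relationship, object) triples, optionally filtered to a single relationship type such as contrasts_with. This is a sketch assuming the Neo4j backend, the official neo4j Python driver, and that entity names live in a name property (as in the sperm whale query later in this post); list_triples is a hypothetical helper.

```python
TRIPLES_QUERY = """
MATCH (a:Entity)-[r]->(b:Entity)
WHERE $rel_type IS NULL OR type(r) = $rel_type
RETURN a.name AS subject, type(r) AS relationship, b.name AS object
ORDER BY subject
"""


def list_triples(driver, rel_type=None, database="neo4j"):
    """Return (subject, relationship, object) tuples; rel_type=None returns all of them."""
    # Keyword arguments without a trailing underscore become Cypher parameters
    records, _, _ = driver.execute_query(TRIPLES_QUERY, rel_type=rel_type, database_=database)
    return [(r["subject"], r["relationship"], r["object"]) for r in records]
```

For the Dickens graph, list_triples(driver, "contrasts_with") would return only the contrasting pairs.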

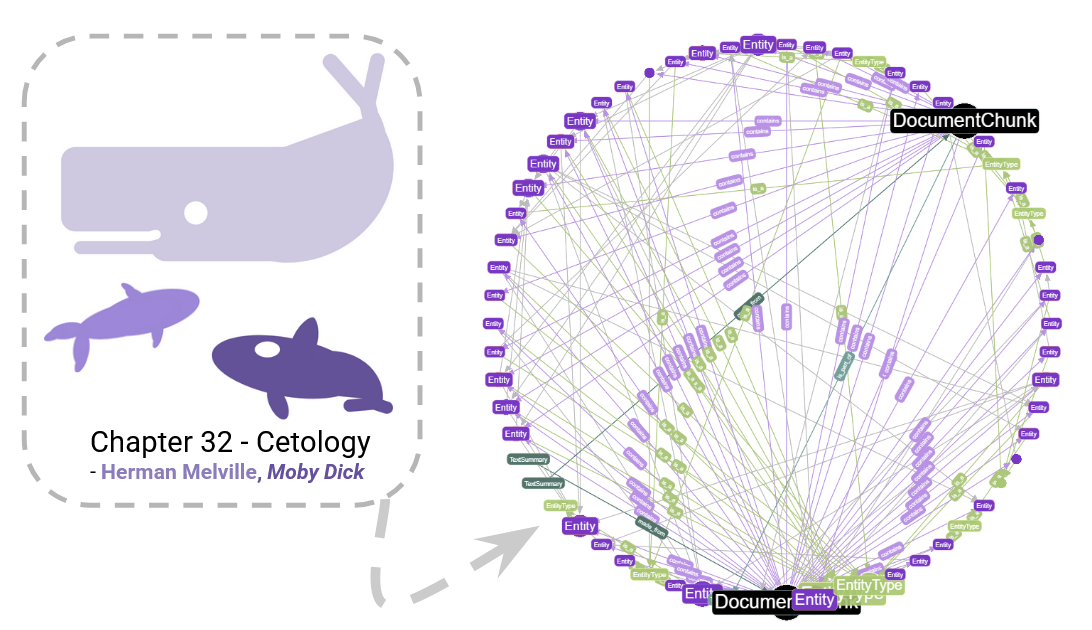

Querying Cetology

Of course, there aren’t many applications for a graph made from a single sentence alone. So if we want to see how we can use Cognee to construct and explore data, we could try Cognifying something more complicated.

Let’s try creating a graph using information from Chapter 32 (Cetology) of Moby Dick. In this section of the book, the plot pauses to discuss the behaviour and classification of whales. Yes, it’s an entire chapter about whale biology!

We didn’t choose this text by accident – in fact this chapter has a bit of a reputation for being technically dense and difficult to read. Since it’s somewhat hard to parse, let’s see if Cognee can help us out.
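One possible way to feed just this chapter into Cognee is sketched below. It assumes a local plain-text copy of the novel (for example from Project Gutenberg) whose chapter headings look like "CHAPTER 32. Cetology."; the file name, heading strings, dataset name and helper names are all illustrative. It also assumes that cognee.add accepts a dataset_name argument and cognee.cognify a datasets list, as in recent releases, so check the API reference for your version.

```python
def extract_chapter(text: str, start_marker: str, end_marker: str) -> str:
    """Return the text from start_marker up to (not including) end_marker."""
    # Use the LAST occurrence of the heading, so a table of contents that
    # repeats the same heading near the top of the file is skipped
    start = text.rfind(start_marker)
    if start == -1:
        raise ValueError(f"start marker not found: {start_marker!r}")
    end = text.find(end_marker, start + len(start_marker))
    if end == -1:  # no following chapter: read to the end of the file
        end = len(text)
    return text[start:end].strip()


async def cognify_chapter(path: str, dataset: str = "moby_dick") -> None:
    # Imported here so extract_chapter can be used without Cognee installed
    import cognee

    with open(path, encoding="utf-8") as f:
        chapter = extract_chapter(f.read(), "CHAPTER 32. Cetology.", "CHAPTER 33.")
    await cognee.add(chapter, dataset_name=dataset)  # assumed keyword argument
    await cognee.cognify(datasets=[dataset])          # assumed keyword argument
```

You would then run it with asyncio.run(cognify_chapter("moby_dick.txt")).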

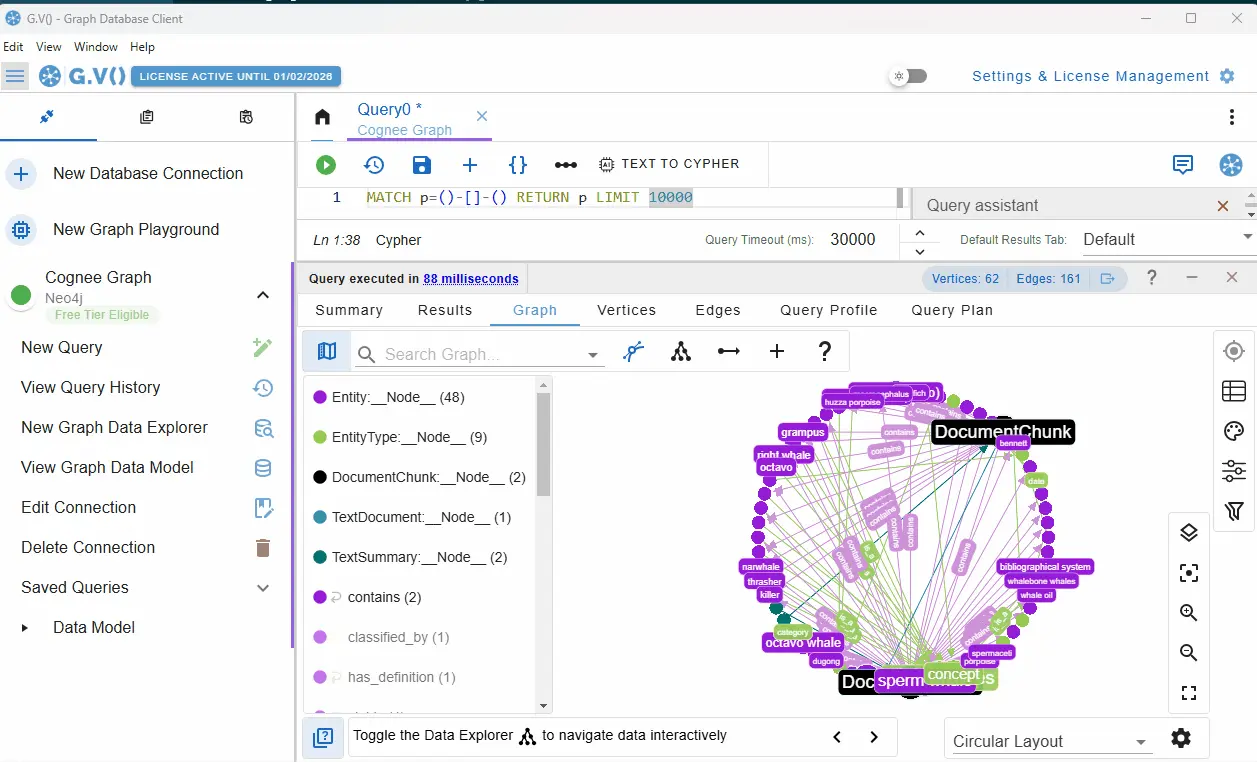

If we Cognify Chapter 32 – Cetology, we obtain the following graph:

This is somewhat larger and more complex than our previous graphs. Since it’s hard to see everything in this graph at a glance, we’d like to explore our data. To do this, we have three options:

1. Query in natural language (Cognee)

2. Use graph query language (Cognee or G.V())

3. Visually explore graph (G.V())

Let’s explore each of these options…

1. Query in natural language (Cognee)

The cognee.search() function lets you query the graph directly with natural language prompts. For example, you can use it to ask which whales are mentioned in Book II. Octavo:

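```python
import asyncio

import cognee
from cognee import SearchType  # enum of the available query types


async def main():
    # Assumes Chapter 32 has already been added and cognified
    results = await cognee.search(
        query_text="Which whales are mentioned in Book II. Octavo?",
        query_type=SearchType.GRAPH_COMPLETION,
    )
    print(results)


asyncio.run(main())
```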

Which gives the result:

Book II (Octavo) mentions: Grampus; Black Fish; Narwhale; Thrasher; Killer.

You can change the kind of query performed through the query_type parameter; see the Search Type documentation for the available options.
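Because the search type is just an argument, it is easy to compare several of them on the same question. A minimal sketch, assuming the search function is passed in (cognee.search in practice) so the comparison logic can be exercised without a live LLM. GRAPH_COMPLETION appears above; other members such as RAG_COMPLETION and CHUNKS exist in recent releases, but check the Search Type documentation for your version. compare_search_types is a hypothetical helper.

```python
import asyncio  # used to drive the coroutine, e.g. asyncio.run(...)


async def compare_search_types(search, question, query_types):
    """Ask the same question once per search type and collect the answers.

    `search` is any coroutine function accepting cognee.search's
    query_text/query_type keywords.
    """
    answers = {}
    for query_type in query_types:
        # Run sequentially rather than concurrently to stay gentle on LLM rate limits
        answers[query_type] = await search(query_text=question, query_type=query_type)
    return answers
```

Usage: asyncio.run(compare_search_types(cognee.search, "Which whales are mentioned in Book II. Octavo?", [SearchType.GRAPH_COMPLETION, SearchType.RAG_COMPLETION, SearchType.CHUNKS])).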

2. Use graph query language (Cognee or G.V())

Both Cognee and G.V() allow you to query your knowledge graph using the Cypher query language.

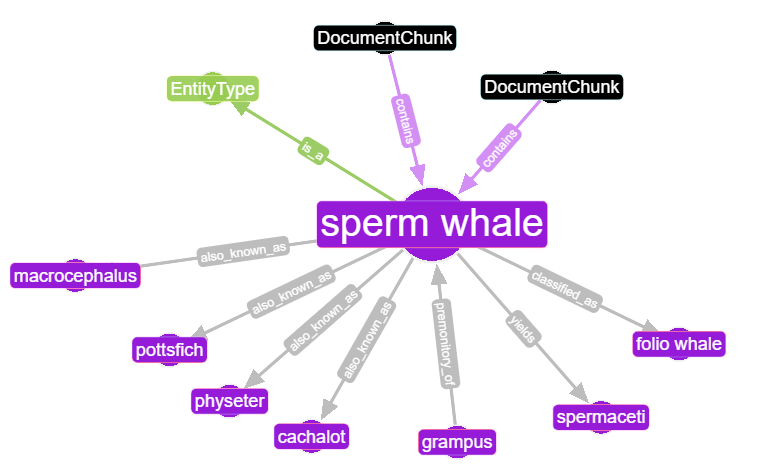

Since Moby Dick himself is a sperm whale, we can query for a sperm whale node and look for its nearest neighbours. You can do this in Cypher via either G.V(), Cognee or Neo4j with the following command:

```cypher
MATCH p=(n:Entity:__Node__)-[]-()
WHERE n.name = "sperm whale"
RETURN p LIMIT 100
```

If you run this through G.V(), you should see something like the result below. This illustrates all the first-degree information in the knowledge graph relating to sperm whales:

This simple query provides a wealth of information at a glance. We can see that the sperm whale is also known as macrocephalus, pottsfich, physeter or cachalot, that it produces spermaceti, and that it is a type of folio whale. We can also see that the appearance of a grampus (another kind of whale) is sometimes taken as a premonition of a sperm whale!
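The same neighbourhood query can also be run from Python and regrouped by relationship type, which makes aliases, products and classifications easy to read off. This sketch assumes the Neo4j backend and the official neo4j Python driver, passes the entity name as a Cypher parameter rather than pasting it into the query string, and uses a hypothetical helper name, neighbours_by_relationship.

```python
from collections import defaultdict

NEIGHBOURS_QUERY = """
MATCH (n:Entity:__Node__ {name: $name})-[r]-(m)
RETURN type(r) AS relationship, m.name AS neighbour
LIMIT $limit
"""


def neighbours_by_relationship(driver, name, limit=100, database="neo4j"):
    """Group the first-degree neighbours of one entity by relationship type."""
    records, _, _ = driver.execute_query(
        NEIGHBOURS_QUERY, name=name, limit=limit, database_=database
    )
    grouped = defaultdict(list)
    for record in records:
        # neighbour is None for nodes that have no name property
        grouped[record["relationship"]].append(record["neighbour"])
    return dict(grouped)
```

Calling neighbours_by_relationship(driver, "sperm whale") returns a dictionary keyed by relationship type.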

3. Visually explore graph (G.V())

An alternative method is to use the Graph Data Explorer function in G.V(). This is a no-code way to explore graphs with smart filtering and auto-complete functionality.

See the GIF below, illustrating how you can use the Graph Data Explorer to see which whale species are in our knowledge graph. Start by looking for the EntityType node whose name property is ‘whalespecies’. G.V() then automatically prompts you with the kinds of edges available from that node, and we can see that various Entity nodes connect to it via the [is_a] edge.

Submit the query, and view the whale species mentioned in the passage: razor-back, black fish, thrasher, hump-back, sulphur-bottom, porpoise, right, killer, sperm, grampus, narwhal and fin-back!
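For readers who prefer to type the query, the explorer steps above correspond roughly to the Cypher below: start from the EntityType node named whalespecies and follow [is_a] edges to Entity nodes. As with the other sketches, it assumes the Neo4j backend and the official neo4j Python driver; entities_of_type is a hypothetical helper, and the edge direction is left open so the query works whichever way the edge is stored.

```python
ENTITIES_OF_TYPE_QUERY = """
MATCH (e:Entity)-[:is_a]-(t:EntityType {name: $type_name})
RETURN DISTINCT e.name AS name
ORDER BY name
"""


def entities_of_type(driver, type_name="whalespecies", database="neo4j"):
    """List the names of all Entity nodes linked to the given EntityType by an is_a edge."""
    records, _, _ = driver.execute_query(
        ENTITIES_OF_TYPE_QUERY, type_name=type_name, database_=database
    )
    return [record["name"] for record in records]
```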

Conclusion

Well, there we have it! This has been a short (and hopefully fun!) exploration of how you can graph, and then query, just about anything with Cognee.

Play around and explore more of the possibilities; there’s really no limit to what you can graph. And remember, no matter how esoteric the application, Cognee knowledge graphs are always tightly tied to their source texts, so your original sources are always preserved and accessible.

With Cognee’s Memify Pipeline continuously enhancing your memory in the background – cleaning old data, adding associations, and weighting your favorite memories – your knowledge graphs actually get smarter over time! Plus, thanks to Self-Improving Memory Logic, every bit of feedback you give becomes part of the memory itself, making future answers even better. And Cognee’s new Time Awareness captures and reconciles temporal context, so you can focus on the insights instead of the timeline.

Whatever you decide to build, make sure to use G.V() to visualize and share your results, and let us know; we’d love to see what you get up to. You can get in touch directly with the Cognee team on their Discord server. Make sure to send them some love, and if you’d like to contribute to Cognee, or simply star their repo, check out https://github.com/topoteretes/cognee