The Weekly Edge: RDF Edition! [14 November 2025]

![The Weekly Edge: RDF Edition! [14 November 2025] The Weekly Edge: RDF Edition! [14 November 2025]](https://gdotv.com/wp-content/uploads/2025/11/rdf-triplestore-sparql-weekly-edge-14-november-2025.png)

You don’t need another reason to SPARQL, but if you did, this is an excellent one: It’s time for the Weekly Edge, RDF Edition!

# Editor’s note: In light of G.V()’s big RDF announcement earlier this week (see below), this edition of the Weekly Edge is being guest edited by RDF legend Dr. Amir Hosseini. His deep domain knowledge and expertise are no match for Bryce’s bad jokes and referential humor, so we swapped him in. (Sorry, Bryce!)

What is the Weekly Edge? It’s like that one cool radio station in the city where you grew up that played the perfect mix of graph tech hits, deep cuts, and new releases that you’ll never forget – all DJed by the team at G.V().

This week’s track list is all about RDF triplestores and more:

- Hot new single: RDF support now available in G.V()

- Vibing ballad: Trends & analysis from ISWC 2025

- Catchy bop: The RDF & SPARQL 1.2 crash course

- Absolute banger: The ontology-driven Netflix Knowledge Graph

- Classic remix: LPG vs. RDF for building AI (feat. expert trio)

- Pure heat: Why OWL & SHACL aren’t enough for knowledge representation

I’m your DJ, Amir Hosseini, and you’re listening to 109.3 FM, the Weekly Edge. Here’s our first song:

[News:] RDF Support Is Now Available in G.V() !

If you haven’t heard the news already, then you’re in for a treat: your favourite graph IDE now supports RDF triplestores! Until this week, G.V() has only been compatible with LPGs, but as of today G.V() now fully supports both the RDF and LPG pillars of the graph tech industry.

This initial release has been fully tested and documented for six major RDF vendors, including:

If your RDF triplestore of choice isn’t on this list, worry not! It should still work with G.V(), but we just haven’t got around to testing it yet. Rest assured we’re already working on it!

This major release also includes SPARQL support within the G.V() Query Editor, with query profiling (for compatible vendors), smart autocomplete, and embedded documentation. All the standard G.V() features of interactive graph visualization, data model tracking, on-the-fly editing, and no-code data exploration now apply to RDF triplestores as well. Try it out and let us know what you think!

[IRL:] Trends, Happenings & Reflections from ISWC 2025

Last week, the main semantic web event of the year happened in Nara, Japan: the International Semantic Web Conference (ISWC) 2025. It’s like the Oscars, the World Cup, and Eurovision wrapped up in one event, but for academic discussions on semantic technologies. It’s sort of a big deal.

# I’ll publish a fuller analysis and break-down of everything that happened at ISWC early next week, but for now, here’s your tl;dr.

Of note, RDFox received the SWSA Ten-Year Award (pictured above), which could hardly be more symbolic. Ten years ago, RDFox – which incidentally is on our target list for G.V() support – pushed the boundaries of what an RDF store could achieve in terms of in-memory reasoning and incremental materialisation. Today, the fact that RDFox is being celebrated means the community has accepted that reasoning engines aren’t optional; they’re core infrastructure. High-throughput, parallel reasoning, and billions of triples in live systems are no longer research bragging rights but real operational expectations.

Overall, ISWC 2025 confirmed three converging trajectories: scalability, intelligence, and operationalisation. RDF stores and reasoning engines must now scale like databases; knowledge graphs must learn and interact like AI systems; and all of it must run reliably in production. The Semantic Web is no longer a research ideal – it’s the main infrastructure for gen AI over the next decade.

[Workshop/Slides:] An Introduction to RDF & SPARQL 1.2

Out of all the great talks and tutorials coming out of ISWC last week, this crash-course tutorial on RDF and SPARQL 1.2 is one you shouldn’t skip.

Organized by the W3C RDF & SPARQL Working Group, this tutorial walks you through the upcoming updates to the 1.2 standards. Moving beyond RDF 1.1/SPARQL 1.1 with triple-term support would mean allowing statements about statements, refined reifications, modified literal handling, and other smaller changes. Bringing those changes to the standard is challenging, because some knowledge bases (like Wikidata) are well-established with their own data models, and others have contradictory requirements sometimes!

Let’s hand this challenge over to the working group. If you’re working with linked data, SHACL/ShEx, and large knowledge graphs, this crash course gives you a forward-view of how modelling choices, query capabilities and tool-support may shift with the 1.2 standards. You can pre-consider impacts on your pipelines and schema designs rather than reacting later. Make sure you click through each of the presentation links to access the full slide decks!

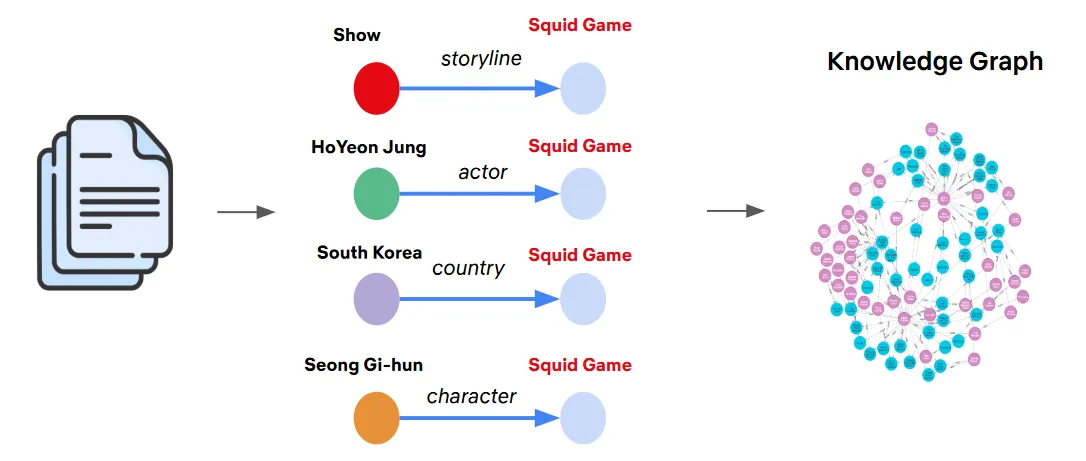

[Deep Read:] A Look Inside the Entertainment Knowledge Graph at Netflix

Netflix put the N in FAANG, so when they talk about their tech, people listen. In this week’s first deep read, author Himanshu Singh writes about how the Netflix team uses an ontology-driven, RDF-based architecture to power the Entertainment Knowledge Graph.

Given the bales upon bales of disparate datasets at an enterprise like Netflix, it only makes sense that they’d need to bring it all together into a cohesive whole. In turn, it’s no surprise that they’d choose to do that using a knowledge graph with rich semantic connectivity. Check out the article for a deep dive into all the details.

[Watch/ICYMI:] Which Is Better for AI: Labeled Property Graph or RDF Triplestore?

As the honorary Ms. Frizzle of Graphs, Dr. Ashleigh Faith is no stranger to educating folks on the differences between labeled property graphs (LPG) and RDF triplestores and when it’s appropriate to use each to achieve your specific goals. As her deep library of videos on YouTube demonstrates, she’s got the book smarts and the street cred to tackle the question with the insight it deserves.

In this week’s watch, she pulls two more experts into the LPG vs. RDF discussion with a lens on building AI. The resulting conversation feels sort of legendary with Dr. Jesús Barrasa (The Indiana Jones of Graph Databases) and Dean Allemang (The Professor X of RDF) joining Dr. Ashleigh Faith in discussing – and debating – the trade-offs of each technology and what’s the best tool for the job.

[Deep Read:] Beyond OWL & SHACL: Why Dependent Type Theory is the Future of Knowledge Representation

The title of this article alone feels like pulling the pin on an RDF grenade: dangerous, foolhardy, and certain to generate discussion on LinkedIn. In this week’s second deep read, Volodymyr Pavlyshyn argues that the OWL (Web Ontology Language) and SHACL (Shapes Constraint Language) query languages have outlived their usefulness when it comes to real-world knowledge representation. 🧨💥🫣

Instead, Pavlyshynn contends that the semantic web community needs to level up to Agda: a dependently typed functional programming language. Agda is better situated, argues Pavlyshynn, for more accurate graph models of knowledge representation, such as hypergraphs, metagraphs, and temporal metagraphs. Then, to be fair, Pavlyshynn points out the weaknesses and challenges facing widespread adoption of Agda. Whatever your thoughts or background in RDF, it’s a crackerjack read.

P.S. If you’re attending Connected Data London next week, be sure to catch up with us at the GraphGeeks mixer. 🐙 🥂

Got something you want to nominate for inclusion in a future edition of the Weekly Edge? Ping us on on X | Bluesky | LinkedIn or email weeklyedge@gdotv.com.

![Announcing Apache AGE Support, RDF Expansion, a Brand-New Interface, Updated Labels & More [v3.48.105 Release Notes] Announcing Apache AGE Support, RDF Expansion, a Brand-New Interface, Updated Labels & More [v3.48.105 Release Notes]](https://gdotv.com/wp-content/uploads/2025/12/apache-age-graph-extension-support-rdf-expansion-gdotv-release.png)